Ensuring Flexible Evaluation as Project Needs Change

Designing and implementing an effective public health program requires completing multiple activities, including identifying stakeholders and participants, completing a comprehensive needs assessment, and using a clear theory of change with specific, measurable aims to track progress. No matter how much time and effort you spend on these activities, factors beyond your control may require changes in program activities or delivery. As programmatic content shifts alongside changing program needs, so too must evaluation strategies. By being flexible, you can evaluate the new program activities as well as those you planned to evaluate from the outset. You can also better understand, in real time, why these changes occurred and the impact they had on the project.

Interested in learning more? We’re sharing four strategies for creating flexible evaluation processes that support greater program success and a clearer understanding of program outcomes.

1. Make your logic model a “living document”

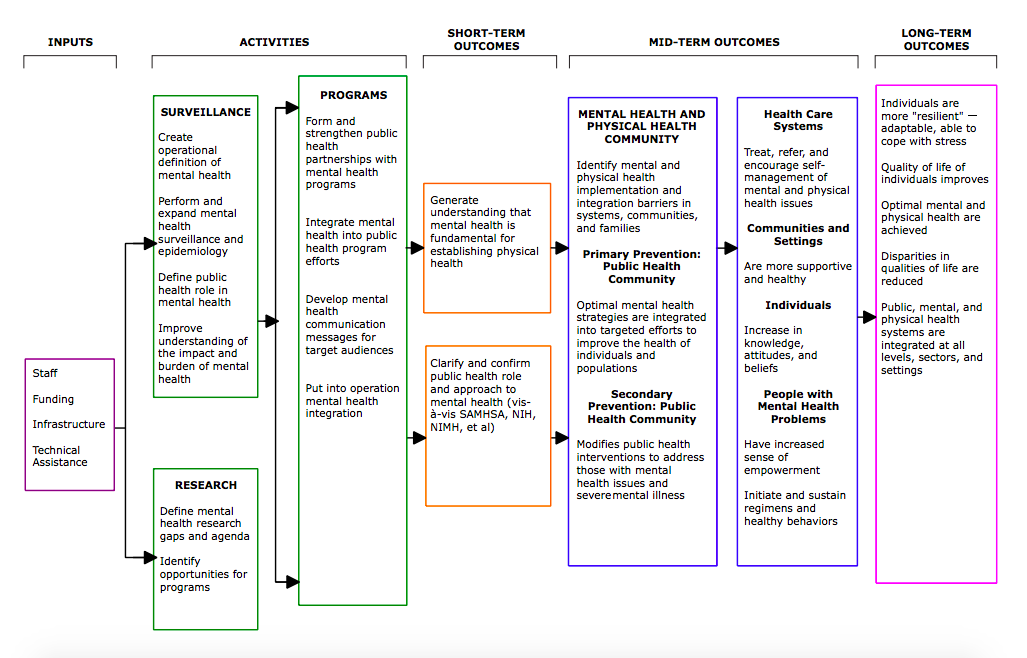

A logic model is a graphic depiction that presents the shared relationships among the resources, activities, outputs, outcomes, and impact for your program. They are essential in illustrating how goals and outcomes will be achieved through program activities and are valuable tools for determining the direction of your program. Logic models are often developed during the early phases of program development, but they should not be set in stone. Aspects of your logic model should continuously evolve as you catalogue and institutionalize changes that occur within your program. A valuable way to ensure that your logic model remains accurate is by building in regular intervals to review your model with project staff, funders, and program participants. In doing so, your team will have the opportunity to timely reflect on the status of the program activities and goals established in your model.

Additionally, allowing ongoing edits to your logic model is a useful way to track changes. But if you make changes to your model, be sure to preserve previous versions. You can utilize previous versions to assist in explaining to funders and other entities how your program shifted along the way.

Draft of a logic model for integrating mental health into chronic disease prevention and health promotion.
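For teams that track their logic model electronically, the advice to preserve previous versions could be sketched as follows. This is a minimal illustration, not a prescribed tool: the class names `LogicModel` and `LogicModelHistory`, the `revise` method, and the example dates and activities are all hypothetical.

```python
from copy import deepcopy
from dataclasses import dataclass, field, fields
from datetime import date


@dataclass
class LogicModel:
    """Hypothetical container for the five components of a logic model."""
    resources: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    outcomes: list[str] = field(default_factory=list)
    impact: list[str] = field(default_factory=list)


@dataclass
class LogicModelHistory:
    """Keeps the current model plus a snapshot of every earlier version."""
    current: LogicModel
    # Each entry: (review date, reason for the change, model before the change).
    previous: list[tuple[date, str, LogicModel]] = field(default_factory=list)

    def revise(self, when: date, reason: str, **changes: list[str]) -> None:
        valid = {f.name for f in fields(LogicModel)}
        unknown = set(changes) - valid
        if unknown:
            raise ValueError(f"Unknown component(s): {sorted(unknown)}")
        # Preserve the old version before applying any edits.
        self.previous.append((when, reason, deepcopy(self.current)))
        for component, items in changes.items():
            setattr(self.current, component, list(items))


# Illustrative usage with made-up program details.
history = LogicModelHistory(LogicModel(activities=["In-person workshops"]))
history.revise(
    date(2024, 3, 1),
    "Venue unavailable; sessions moved online",
    activities=["Virtual workshops"],
)
```

Because each snapshot is stored with a date and a reason, the `previous` list doubles as a plain record of how and why the program shifted, which is the kind of account a funder may ask for.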

2. Build in regular “pulse-checks” during programmatic activities

Throughout the implementation of a program, set aside time to check with project creators and implementers on whether they are meeting the goals originally laid out in the logic model. One simple way to receive updates from your implementation team is to administer a one- or two-question survey each quarter. Survey responses allow your evaluation team to stay proactive and aware of program changes before and as they occur. These check-ins can also help evaluators adjust evaluation activities in response to program changes.
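If the quarterly survey responses are collected electronically, a short script can flag which ones call for a follow-up conversation. The sketch below is one possible approach under stated assumptions: the two survey questions, the `PulseCheck` fields, and the `needs_follow_up` rule are hypothetical and should be adapted to your own program.

```python
from dataclasses import dataclass


@dataclass
class PulseCheck:
    """One response to a hypothetical two-question quarterly survey."""
    quarter: str      # label such as "Q1"
    respondent: str   # role or name of the implementation team member
    on_track: bool    # Q1: Are activities meeting the goals in the logic model?
    changes: str      # Q2: What has changed, or is about to change? (free text)


def needs_follow_up(responses: list[PulseCheck]) -> list[PulseCheck]:
    """Return responses that report being off track or describe any change."""
    return [r for r in responses if not r.on_track or r.changes.strip()]


# Illustrative responses with made-up content.
responses = [
    PulseCheck("Q1", "Site coordinator", True, ""),
    PulseCheck("Q1", "Outreach lead", True, "Adding a second site next month"),
    PulseCheck("Q1", "Program manager", False, ""),
]

for r in needs_follow_up(responses):
    print(f"{r.quarter}: follow up with {r.respondent}")
```

The rule deliberately flags on-track responses that mention a change, since an upcoming change may need new evaluation activities even when current goals are being met.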

3. Elicit qualitative feedback from those involved in all aspects of your program

Formal or informal feedback from program participants, staff, and funders can supplement pre-existing data collection touchpoints. By making it a fixed evaluation activity to include the voices of everyone connected to a program, you can ensure that priorities align across the board and guide changes to program delivery more proactively.

How you elicit feedback and updates on the status of the program depends on whom you are addressing. For example, participants may be more willing to speak up in a group setting such as a focus group, while program staff or a funder may prefer a one-on-one setting such as a key-informant interview. Be deliberate about whom you include in qualitative touchpoints to maximize feedback from every sector of your program.

4. Cultivate a “culture of evaluation”

Building a “culture of evaluation” within your program helps keep your evaluation plan flexible: it allows feedback to flow more evenly between evaluators and program staff, and it keeps everyone continuously aware of the program’s ongoing outcomes. Building internal evaluation capacity among program staff helps all the steps identified above flow more smoothly.

Building internal capacity requires teaching project staff about the importance of evaluation and helping them understand that evaluation doesn’t just occur at the end of a project. Practical support, such as assisting with programmatic tracking processes or providing technical assistance on data-related concerns, can also help build staff capacity.

Include as many voices as possible in making decisions about flexible evaluation processes to establish a clear and consistent understanding of the importance of ongoing evaluation.

Even the best-laid plans for evaluation can hit a snag, because program delivery occurs in the real world and is subject to factors beyond an evaluator’s control. Creating a flexible evaluation process helps evaluators stay on top of big programmatic changes and capture promising new activities.
